Is it directly exposed over the Internet? If you only port forward the VPN on your router, I wouldn’t worry about it unless you’re worried about someone else already on your LAN.

And even then, it’s really more like an extra layer of security against accidentally running something exposed publicly that you didn’t intend to, or maybe you want some services to only be accessible via a particular private interface. You don’t need a firewall if you have nothing to filter in the first place.

A machine without a firewall that doesn’t have any open ports behaves practically the same as a firewalled one from a security standpoint: nothing’s gonna happen. The only difference is the port showing as closed vs filtered in nmap, because a firewalled server refuses to send any response at all, not even a rejection, but that’s it.
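To make the closed vs filtered distinction concrete, here is a minimal sketch of what a scanner sees from the outside. The function name `probe_port` and the 2 second timeout are my own choices for illustration, and this is much cruder than what nmap actually does: a refused connection means the host answered with a rejection (closed), while a timeout means nothing came back at all (most likely filtered, i.e. dropped by a firewall).

```python
import socket

def probe_port(host, port, timeout=2.0):
    """Classify a TCP port roughly the way a scanner would (hypothetical helper)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        try:
            s.connect((host, port))
        except ConnectionRefusedError:
            # The host replied with a reset: nothing is listening, no firewall drop.
            return "closed"
        except socket.timeout:
            # No reply at all within the timeout: packets were probably dropped.
            return "filtered"
        except OSError:
            # Anything else (no route, name resolution failure, ICMP reject...).
            return "unreachable"
        return "open"
```

Either way the attacker gets nothing to talk to, which is the point: closed and filtered only differ in how politely the machine says no.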

It’s not impossible, been running my own email server for about 10 years and I inbox pretty much everywhere. I even emailed my work address and it went straight to inbox. I do have the full SPF, DKIM and DMARC stuff set up, for which I get notices from several email providers about failed spoof attempts.
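For anyone who hasn’t looked at one, a DMARC policy is just a TXT record made of `tag=value` pairs separated by semicolons. Here’s a small sketch that splits one apart. The record below is a made-up example for `example.com`, not my actual record, and `parse_dmarc` is a hypothetical helper rather than part of any mail software. The `rua` tag is the address where providers send the aggregate reports.

```python
def parse_dmarc(record):
    """Split a DMARC TXT record into a dict of tag -> value (illustrative only)."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue  # skip the empty piece after a trailing semicolon
        key, _, value = part.partition("=")
        tags[key.strip().lower()] = value.strip()
    return tags

# Hypothetical record: reject failing mail, send aggregate reports to a mailbox.
example = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"
policy = parse_dmarc(example)
print(policy["p"], policy["rua"])
```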

It takes time and effort to gain that reputation, but it’s doable. And OVH’s IPs don’t exactly have a great reputation either. Once you’re delisted from most spam databases and the old spam reputation has expired, it’s not that bad.

Although I do agree it’s possibly one of the hardest services to self host. The software to run email servers is ancient and weird, and takes a lot to set up right. If you get it wrong you relay spam and start over, it’s rough.

Ordered two drives from them, came in very well packaged and even included the PWDIS adapter. Very good deals. Could throw the box across the yard and the drives would probably survive.

As a starting point. Are there any hardware recommendations for a toy home server?

Whatever you already have. Old desktop, even old laptop (those come with a built-in battery backup!). Failing that, Raspberry Pis are pretty popular, cheap and low on power consumption, which makes them great if you’re not sure how much you want to spend.

Otherwise, ideally enough to run everything you need based on rough napkin math. Literally the only requirement is that the stuff you intend to run fits on it. For reference, my primary server which hosts my Lemmy instance (and emails and NextCloud and IRC and Matrix and Minecraft) is an old Xeon processor close to a third gen Intel i7 with 32GB of DDR3 memory, there’s 5 virtual machines on it (one of which is the Lemmy one), and it feels perfectly sufficient for my needs. I could make it work with half of that no problem. My home lab machine is my wife’s old Dell OptiPlex.

Speaking of virtual machines, you can test the waters on your regular PC by just loading whatever OS you choose in a virtual machine (libvirt if you’re on Linux, VirtualBox or VMware otherwise). Then play with it. When it works, make a snapshot. Continue playing with it, break it, revert to the last good snapshot. A real home server will basically be the same but as a real machine that’s on 24/7. It’s also useful to test things out as a practice run before putting them on your real server machine. It also gives you a rough idea of how many resources it uses, and you can always grow your VM until it fits and then know how much you need for the real thing.

Don’t worry too much about getting it right (except the backups, get those right, verify and test those regularly). You will get it wrong and eventually tear it down and rebuild it better with what you learn (or want to learn). Once you gain more experience it’ll start looking more and more like a real server setup, out of your own desire and needs.

I feel like a lot of the answers in this thread are throwing a lot of things with a lot of moving parts: Unraid, Docker, YunoHost, all that stuff. Those all still require generally knowing what the hell a Docker container is, how to use them and such.

I wouldn’t worry about any of that and start much simpler than that: just grab any old computer you want to be your home server or rent a VPS and start messing with it. Just pick something you think would be cool to run at home. Anything you run on your personal computer you wish was up 24/7? Start with that.

Ultimately there’s no right or wrong way to do things. It’s all about that learning experience and building up that experience over time. You get good by trying out things, failing and learning. Don’t want to learn Linux? Put Windows on it. You’ll get a lot of flak for it maybe, but at the very least over time you’ll probably learn why people don’t use Windows for server stuff generally. Or maybe you’ll like it, that happens too.

Just pick a project and see it to completion. Although if you start with NextCloud and expose it publicly, maybe wait to be more comfortable with the security aspect before you start putting copies of your taxes and personal documents on it just in case.

What would you like to self host to get started?

but I’m curious if it’s hitting the server, then going to the router, only to be routed back to the same machine again. 10.0.0.3 is the same machine as 192.168.1.14

No, when you talk to yourself, you talk to yourself: it doesn’t go out over the network. But you can always check using utilities like tracepath, traceroute and mtr. They’ll show you the exact path taken.
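If you’d rather ask the kernel than read a trace, here’s a rough heuristic sketch, assuming Linux behaviour: when the destination is one of the machine’s own addresses, the kernel picks that same address as the source. The function names are made up for this example, and port 9 is arbitrary since a UDP `connect()` doesn’t send any packet. Treat it as a quick sanity check, not a replacement for the tools above.

```python
import socket

def source_address_for(dest_ip):
    """Return the source IP the kernel would use to reach dest_ip (no traffic sent)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.connect((dest_ip, 9))  # UDP connect only selects a route, sends nothing
        return s.getsockname()[0]

def is_local_destination(dest_ip):
    """Heuristic: traffic stays on the machine if source and destination match."""
    try:
        return source_address_for(dest_ip) == dest_ip
    except OSError:
        # No route to the destination at all.
        return False

print(is_local_destination("127.0.0.1"))
```

Run on the game server with 10.0.0.3 or 192.168.1.14 as the argument, I’d expect it to report that both are local.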

Technically you could make the 172.18.0.0/16 subnet accessible directly to the VPS over WireGuard and skip the double DNAT on the game server’s side but that’s about it. The extra DNAT really won’t matter at that scale though.

It’s possible to do without any connection tracking or NAT, but at the expense of significantly more complicated routing for the containers. I would do that on a busy 10Gbit router or if somehow I really needed the public IP of the connecting client to not get mangled. The biggest downside of your setup is that the game server will see every player as coming from 192.168.1.14 or 172.18.0.1. With the subnet routed over WireGuard it would appear to come from the VPN IP of the VPS (guessing 10.0.0.2). It’s possible to get the real IP forwarded but then the routing needs to be adjusted so that it doesn’t go Client -> VPS -> VPN -> Game Server -> Home router -> Client.

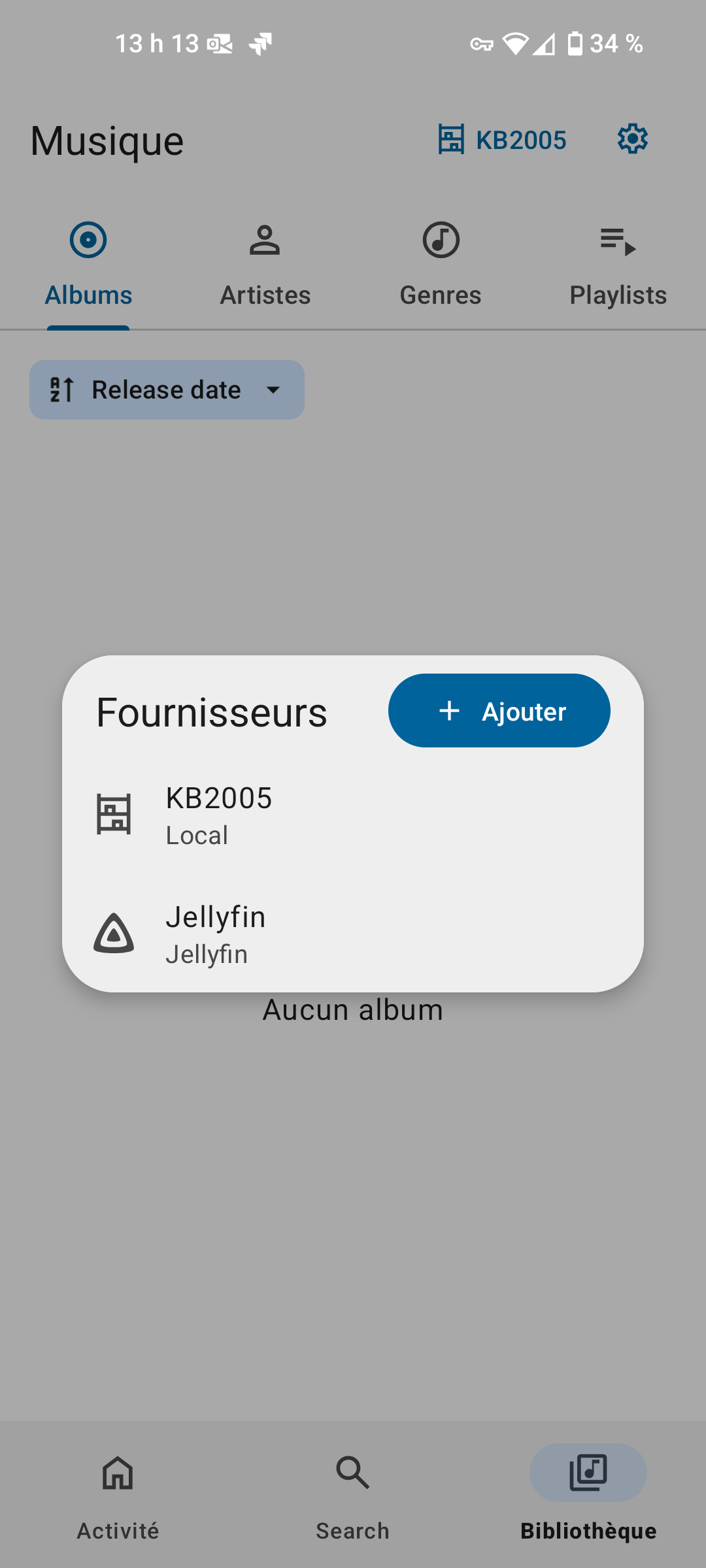

LineageOS’s default music app, Twelve, supports Jellyfin as a source:

As someone that admins hundreds of MySQL at work, I’d go with PostgreSQL.

To kind of visually see it, I found this thread of some guy that took oscilloscope captures of the output of their UPS and they’re all pseudo-sines: https://forums.anandtech.com/threads/so-i-bought-an-oscilloscope.2413789/

As you can see, the power isn’t very smooth at all. It’s good enough for a lot of use cases and lower end power supplies, because they just shove that into a bridge rectifier and capacitors. Higher end power supplies have tighter margins, and are also more likely to have more safety features to protect the PC, so they can go into protection mode and shut off. That’s because bad power can mean dips in power to the system, which can cause calculation errors, which is very undesirable, especially on a server. It probably also messes with power factor correction circuits, which is something cheap PSUs often skip but a good high quality one would have, and it may shut down because of it.

As you can see in those images too, it spends a significant amount of time at 0V (no power, that’s at the middle of the screen) whereas a sine wave spends an infinitely short time at 0: it goes from positive to negative immediately. For all the time spent at 0, you rely on big capacitors in the PSU to hold enough charge to make it to the next burst of power. With the sine wave they’d only have to hold just long enough (we’re going down to 12V and 5V from 120/240V input, so the amount of time normally spent at or below ±12V is actually fairly short).
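To put rough numbers on that, here’s a quick napkin calculation. The assumptions are mine and not measured from those scope captures: a 120V RMS supply, and an idealized stepped wave made of flat pulses with the same peak voltage and the same RMS as the sine. Real units vary a lot, so take the stepped figure as an illustration only.

```python
import math

def sine_fraction_below(v_rms, threshold):
    """Fraction of each cycle a pure sine spends with |V| at or below threshold."""
    v_peak = v_rms * math.sqrt(2)
    return (2 / math.pi) * math.asin(threshold / v_peak)

def stepped_zero_fraction(v_rms, v_peak):
    """Fraction of each cycle an idealized stepped wave sits at 0V.

    Assumes flat pulses of +/- v_peak separated by dead time, so that
    v_rms**2 == v_peak**2 * duty.
    """
    duty = (v_rms / v_peak) ** 2
    return 1 - duty

sine = sine_fraction_below(120, 12)
stepped = stepped_zero_fraction(120, 120 * math.sqrt(2))
print(f"sine at or below 12V: {sine:.1%} of the cycle")
print(f"stepped wave at 0V:   {stepped:.1%} of the cycle")
```

Under those assumptions the sine is below ±12V for roughly 4.5% of the cycle, while the stepped wave sits at 0V for about half of it, which is the gap the PSU’s capacitors have to bridge.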

It’s technically the same average power, so most devices don’t really care. It really depends on the design of the particular unit, some can deal with some really bad power inputs and manage just fine and some will get damaged over long term use. Old linear ones with an AC transformer on the input in particular can be unhappy because of magnetic field saturation and other crazy inductor shenanigans.

Pure sine UPSes are better because their output is basically the same as what comes out of the wall outlet. Line interactive ones are even better because they’re ready to take over the moment power goes out and at exactly the same spot in the sine wave, so the jitter isn’t quite as bad during the transition. Double conversion is the top tier because the load always runs off the inverter, so there’s no interruption for the connected computer at all. Losing power just means the battery isn’t being charged/kept topped off from the wall anymore so it starts discharging.

If you look at my username you’ll see I do run my own instance so I’ve gone through the process :)

I would probably just skip the Lemmy Easy Deploy and do a regular deployment so it doesn’t mess with your existing setup. Getting it running with just Docker is not that much harder and you just need to point your NGINX to it. Easy Deploy kind of assumes it’s got the whole machine to itself so it’ll try to bind on the same ports as your existing NGINX, and so does the official Ansible.

You really just need a postgres instance, the backend, pictrs, the frontend and some NGINX glue to make it work. I recommend stealing the files from the official Ansible, as there’s a few gotchas in the NGINX config as the frontend and backend share the same host and one is just layered on top.

Hasn’t cost me a penny, hurray for unmetered bandwidth

To add: a lot of cert providers also offer ACME so while the primary user of ACME is LetsEncrypt, you can use the same tech and validations as LetsEncrypt on other vendors too.

IMO a lot of what makes nice self-hostable software is clean and sane software in general. A lot of stuff tends to end up trying to be too easy so you can’t scale it up, or so unbelievably complicated you can’t scale it down. Don’t make me install an email server and API keys to services needed by features I won’t even use.

I don’t particularly mind needing a database and Redis and the likes, but if you need MySQL and PostgreSQL and Redis and memcached and an ElasticSearch cluster and some of it is Go, some of it is Ruby and some of it is Java with a sprinkle of someone’s erlang phase, … no, just no, screw that.

What really sucks is when Docker is used as a bandaid to hide all that insanity under the guise of easy self-hosting. It works but it’s still a pain to maintain and debug, and it often uses way more resources than it really needs. Well written software is flexible and sane.

My stuff at work runs equally fine locally in under a gig of RAM and barely any CPU at idle, and yet spans dozens of servers and microservices in production. That’s sane software.

You just put both in the server_name line and you’re good to go.

Yep, and I’d guess there’s probably a huge component of “it must be as easy as possible” because the primary target is selfhosters that don’t really even want to learn how to set up Docker containers properly.

The AIO Docker image is an abomination. The other ones are slightly more sane but they still fundamentally mix code and data in the same folder so it’s not trivial to just replace the app.

In Docker, the auto updater should be completely neutered, it’s the wrong way to update the app.

The packages in the Arch repo are legit saner than the Docker version.

Yeah, that didn’t stop it from pwning a good chunk of the Internet: https://en.wikipedia.org/wiki/Log4Shell

IMO the biggest attack vector there would be a Minecraft exploit like log4j, so the most important part to me would be making sure the game server is properly sandboxed just in case. Start from the point of view that the attacker breached Minecraft and has shell access as that user. What can they do from there? Ideally, nothing useful other than maybe running a crypto miner. Don’t reuse passwords obviously.

With systemd, I’d use the various Protect* directives like ProtectHome, ProtectSystem=full, or failing that, a container (Docker, Podman, LXC, manually, there’s options). Just a bare Alpine container with Java would be pretty ideal, as you can’t exploit sudo or some other SUID binaries if they don’t exist in the first place.

That said the WireGuard solution is ideal because it limits potential attackers to people you handed a key, so at least you’d know who breached you.

I’ve forgotten Minecraft servers online and really nothing happened whatsoever.

I keep hearing claims that it’s not secure enough to be exposed on the Internet, but I can’t seem to find anything about unauthenticated vulnerabilities. It’s got a fair amount of CVEs but they all seem to require already being an authenticated user, mainly to XSS an admin as a regular user or the like.

It’s written in C#, and publicly all you can do is pretty much attempt to log in, this feels like it should be pretty sane compared to some other PHP crap I run.

Do you have any examples of previous exploits or anything else to be concerned about?